The Challenge

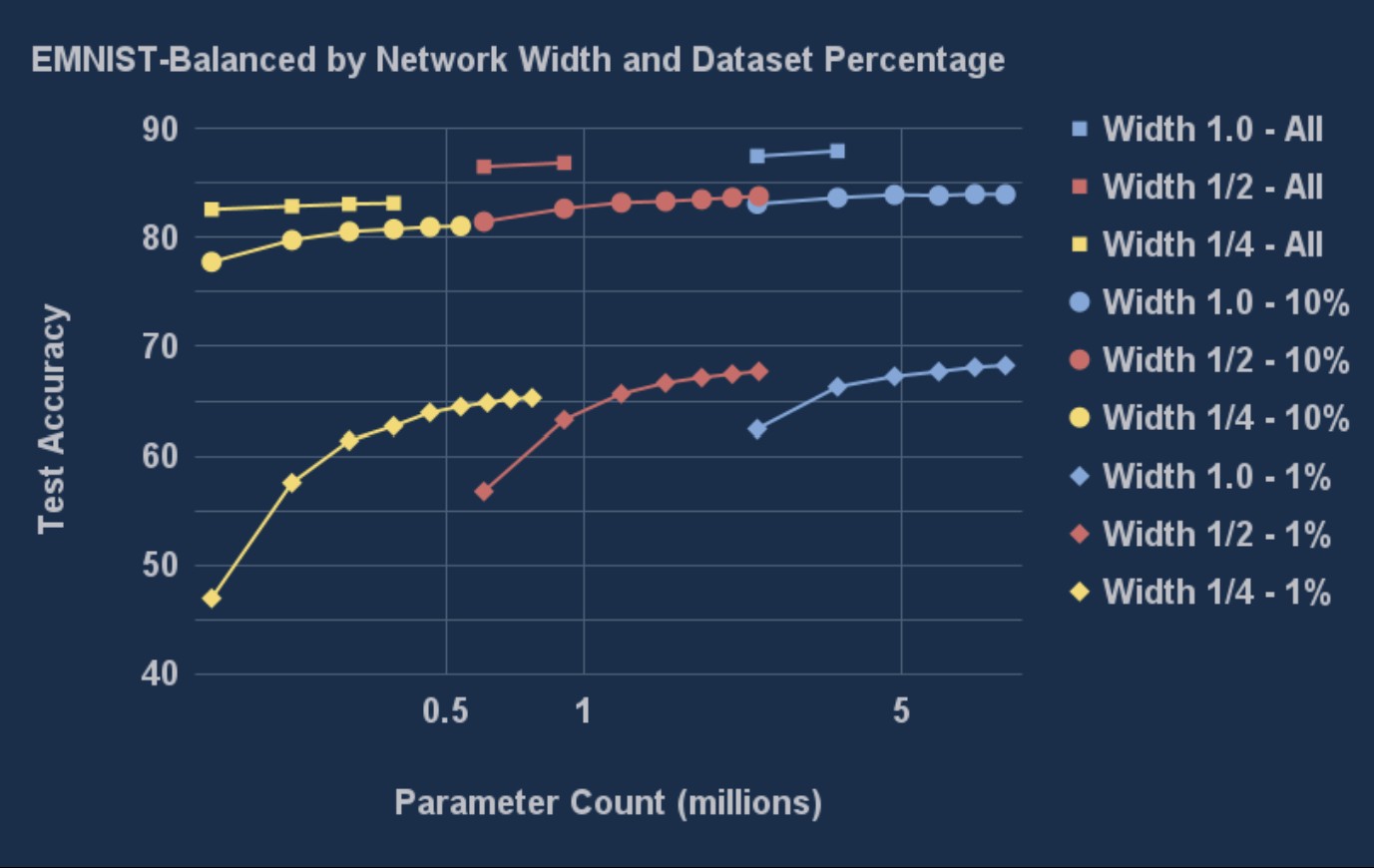

The EMNIST-Balanced dataset contains handwritten letters and digits, with visually similar classes merged together. We tested performance under severe data restrictions, reducing the original 112,800 training images to 11,200 (about 10%) and 1,200 (about 1%) to simulate scenarios where labeled data is scarce.
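The post does not say how the restricted subsets were drawn. As a minimal sketch, assuming a per-class random sample so that all 47 EMNIST-Balanced classes stay represented, a fixed-size subset of training indices could be built as follows. The function name `balanced_subset` and the stand-in labels are hypothetical and only illustrate the idea.

```python
import random
from collections import defaultdict


def balanced_subset(labels, n_total, seed=0):
    """Return n_total indices spread as evenly as possible across classes.

    Assumption: the original study does not describe its sampling method;
    this sketch samples the same number of examples from each class, giving
    one extra example to the first (n_total % num_classes) classes.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    classes = sorted(by_class)
    base, extra = divmod(n_total, len(classes))
    rng = random.Random(seed)  # fixed seed so the subset is reproducible

    chosen = []
    for i, c in enumerate(classes):
        k = base + (1 if i < extra else 0)
        if k > len(by_class[c]):
            raise ValueError(f"class {c!r} has only {len(by_class[c])} examples")
        chosen.extend(rng.sample(by_class[c], k))
    chosen.sort()
    return chosen


# Stand-in labels: 47 classes x 2,400 examples = 112,800 (real labels would come from the dataset).
labels = [i % 47 for i in range(112_800)]
subset_10 = balanced_subset(labels, 11_200)
subset_1 = balanced_subset(labels, 1_200)
```

Sampling per class rather than uniformly over the whole set avoids the risk that a very small subset (such as 1,200 images) leaves some classes with almost no examples.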

The Results

Perforated Backpropagation™ delivered larger gains as training data became more restricted. On the full dataset, it reduced error by 4% compared with the baseline. With only 1% of the data (1,200 images), the error reduction rose to 18.3%, suggesting that dendrites are particularly effective when training data is limited.
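The post does not define "error reduction". One common reading, assumed here, is the relative drop in test error compared with the baseline: an 18.3% reduction would mean 18.3% fewer errors than the baseline, not 18.3 percentage points fewer. A minimal sketch of that calculation, using a hypothetical helper name and made-up error rates:

```python
def relative_error_reduction(baseline_error: float, new_error: float) -> float:
    """Fraction of the baseline's error removed by the new model.

    Assumption: "error reduction" means (baseline - new) / baseline,
    i.e. a relative reduction, not an absolute percentage-point drop.
    """
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return (baseline_error - new_error) / baseline_error


# Hypothetical error rates for illustration only; not results from this study.
baseline, improved = 0.20, 0.15
print(f"{relative_error_reduction(baseline, improved):.1%}")  # prints 25.0%
```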

The compression results were equally striking. With 1% of the training data, Perforated Backpropagation™ maintained accuracy using just 16% of the original parameters. With 10% of the training data, it maintained accuracy with 50% of the parameters.

Real-World Impact

These improvements are critical for edge deployment, mobile applications, and scenarios where labeled data is expensive or difficult to obtain. Better performance with fewer parameters and limited training data enables ML deployment in resource-constrained environments, from IoT devices to applications in developing regions.

Ready to Optimize Your Models?

See how Perforated Backpropagation™ can improve your neural networks.
