When we tackled the Carvana Image Masking Challenge, one of Kaggle's most competitive computer vision competitions, we faced a problem: The baseline U-Net model was already nearly perfect.

But "nearly perfect" still leaves room for innovation.

The Challenge

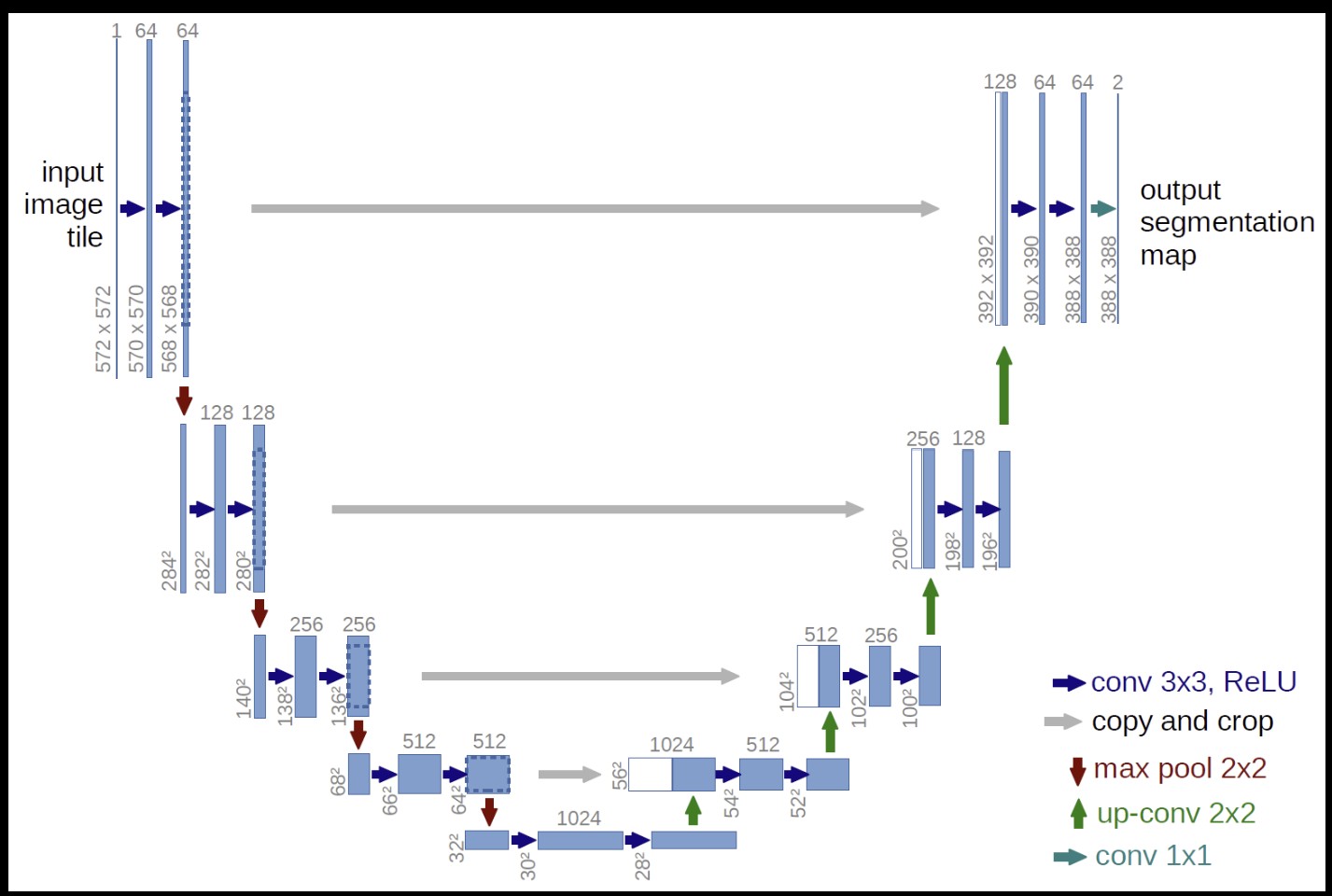

The Carvana Image Masking Challenge asked competitors to automatically remove the background from photos of cars, with pixel-perfect precision, for Carvana's online marketplace. We used U-Net, the de facto standard architecture for image segmentation.

After years of optimization by thousands of data scientists worldwide, squeezing out further improvements seemed nearly impossible. When models already score above 95%, each additional percentage point is harder to win because it removes a growing share of the error that remains: going from 95% to 96% eliminates a fifth of the remaining error, while going from 98% to 99% eliminates half of it. That is the wall computer vision keeps hitting: exceptional performance that is still not quite good enough for critical applications in healthcare, autonomous driving, and other high-stakes visual AI.

The Breakthrough

By integrating Perforated Backpropagation™ with the U-Net architecture, we achieved something remarkable: a 35% reduction in the remaining error, measured as 1 − Dice score.
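To make the "remaining error" framing concrete, here is a minimal Python sketch, assuming binary masks flattened to lists of 0/1 pixels. It computes the Dice coefficient, 2|A ∩ B| / (|A| + |B|), for a predicted and a ground-truth mask, then shows what removing 35% of the remaining error (1 − Dice) means for a given baseline. The helper names (`dice_coefficient`, `remaining_error_reduction`, `dice_after_reduction`) and the 0.990 baseline are hypothetical illustrations, not our actual pipeline or reported results.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], target: Sequence[int]) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks flattened to 0/1 values."""
    if len(pred) != len(target):
        raise ValueError("masks must contain the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Both masks empty: treated as a perfect match (a convention assumed here).
        return 1.0
    return 2.0 * intersection / total


def remaining_error_reduction(baseline_dice: float, new_dice: float) -> float:
    """Fraction of the baseline's remaining error (1 - Dice) that the new model removes."""
    baseline_error = 1.0 - baseline_dice
    if baseline_error <= 0.0:
        raise ValueError("baseline is already perfect; no remaining error to reduce")
    new_error = 1.0 - new_dice
    return (baseline_error - new_error) / baseline_error


def dice_after_reduction(baseline_dice: float, reduction: float) -> float:
    """Dice score reached when the given fraction of the remaining error is removed."""
    return 1.0 - (1.0 - baseline_dice) * (1.0 - reduction)


if __name__ == "__main__":
    # Toy 2x3 masks, flattened row by row (illustrative data only).
    prediction = [1, 1, 0,
                  1, 0, 0]
    ground_truth = [1, 1, 0,
                    0, 0, 0]
    print(f"Dice: {dice_coefficient(prediction, ground_truth):.4f}")

    # Hypothetical baseline Dice, not a number from our experiments.
    baseline = 0.990
    improved = dice_after_reduction(baseline, 0.35)
    print(f"Improved Dice: {improved:.4f}")
    print(f"Remaining-error reduction: {remaining_error_reduction(baseline, improved):.2f}")
```

Under this framing, a hypothetical baseline Dice of 0.990 leaves 0.010 of remaining error, and removing 35% of it lands at 0.9935. The function above scores a single mask; a leaderboard-style score would typically average it over all test images.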

This wasn't an improvement on a mediocre baseline. It took an already elite-performing model and made it dramatically better on a benchmark that had been studied and optimized by some of the world's best computer vision researchers.

Why This Matters

More precise image segmentation means:

- E-commerce platforms can automate product photography without expensive manual editing

- Medical imaging systems can detect tumors and anomalies with greater accuracy

- Autonomous vehicles can better understand their environment in real-time

- Manufacturing and quality control can catch defects that human eyes might miss

When the difference between 95% and 98% accuracy can mean life or death in medical diagnostics, or decide whether a car correctly identifies a pedestrian, these improvements aren't just impressive. They're essential.

The Bottom Line

Computer vision has been one of AI's greatest success stories, but it's also been constrained by incremental gains on mature architectures. Perforated Backpropagation™ shows there's still massive room for improvement, even on problems we thought were nearly solved.

Ready to Optimize Your Models?

See how Perforated Backpropagation™ can improve your neural networks.
